
ChatGPT is bullshitting

Bullshit can pose significant risks for organizations that depend too heavily on AI tools

Main Points:

Harry Frankfurt’s Ideas on Bullshit: In his essay “On Bullshit” (first published in 1986 and reissued as a book in 2005), American philosopher Harry Frankfurt distinguishes between lying and bullshitting based on intent, noting that a liar seeks to deceive while a bullshitter aims to create an impression without caring about the truth. This indifference to truth can lead to conversations that emphasize style over accuracy, which Frankfurt warns is harmful. His ideas are particularly relevant today in the context of social media and rapid information sharing, highlighting the need for critical thinking about communication and ethical issues.

ChatGPT and Hallucination: The term “hallucination” is often used to describe ChatGPT’s incorrect answers, but it can be misleading. “Bullshit” is a more fitting term, as it reflects the AI’s lack of concern for truth when generating responses based on training data patterns.

Implications for Organizations: Companies using ChatGPT must critically evaluate its output, as over-reliance on the tool without verifying accuracy can lead to serious consequences. Careful assessment of generated information is essential for sound decision-making.

Let’s begin by talking about the late American philosopher Harry Frankfurt and the word “bullshit”.

Harry Frankfurt was an American philosopher known for his ideas about “bullshit.” In his essay “On Bullshit,” first published in 1986 and reissued as a book in 2005, he explains the difference between lying and bullshitting based on what the speaker intends.

1. Lying vs. Bullshitting

A liar tries to trick people by saying something false while pretending it’s true. A bullshitter, on the other hand, doesn’t care about the truth; they just want to make a certain impression or persuade others, regardless of whether what they say is accurate.

2. Indifference to Truth

The main feature of bullshit is that the person making the statement doesn’t care whether it’s true or false. This can lead to conversations that focus more on how something sounds than on whether it is true.

3. Implications for Communication

Frankfurt points out that when people prioritize persuasion over truth, it can be harmful. He believes that understanding bullshit is important for dealing with public discussions today.

4. Cultural Relevance

Frankfurt’s ideas are especially important now, with social media and quick information sharing making it hard to tell what is true. His work encourages us to think critically about how we communicate and the ethical issues related to what we say.

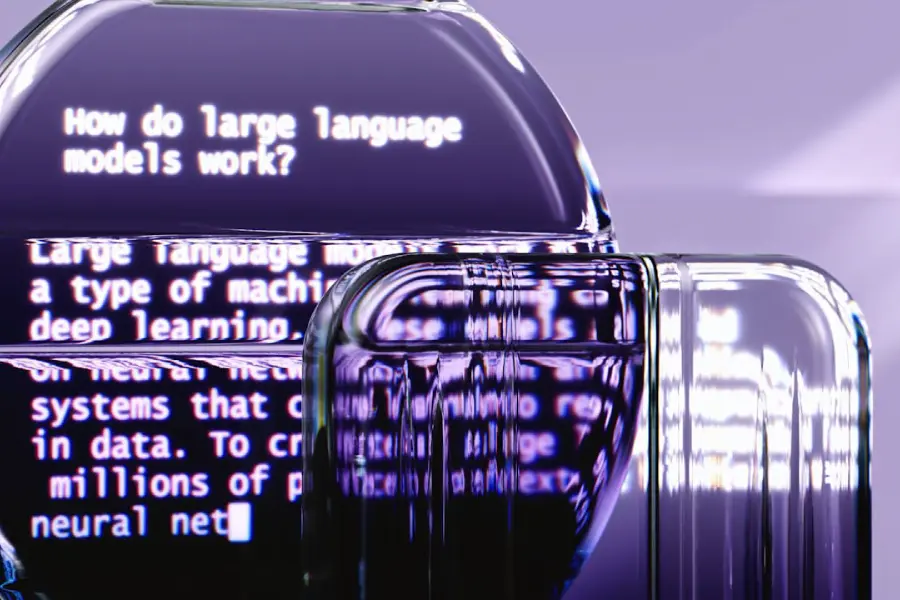

Is ChatGPT hallucinating?

ChatGPT is an AI tool that sometimes gives wrong or confusing answers, which people often call “hallucinations.” However, this word can be misleading because it suggests that the AI is trying to see or understand things. A better word to use is “bullshit,” as it describes how the AI produces answers without caring about whether they are true or accurate. This term helps us understand that ChatGPT doesn’t intend to deceive; it just generates text based on patterns in its training data, even if those answers are not correct.
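To make “generating text based on patterns” concrete, here is a minimal sketch of a toy bigram text generator. It is an illustration only and not how ChatGPT is actually built (ChatGPT uses a very large neural network); the corpus, the function names `train_bigrams` and `generate`, and the prompt are all made up for this example. The point it shows is narrow: the generator picks each next word from what followed the previous word in its training text, and nothing in the loop ever checks whether the resulting sentence is true.

```python
import random
from collections import defaultdict

# Made-up training text for illustration; the model only ever sees word order.
CORPUS = (
    "the capital of france is paris . "
    "the capital of italy is rome . "
    "the capital of spain is madrid ."
)

def train_bigrams(text):
    """Map each word to the list of words that followed it in the text."""
    followers = defaultdict(list)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, max_words=8, seed=None):
    """Extend `start` by repeatedly sampling a word that followed the last one."""
    rng = random.Random(seed)
    out = start.split()
    while len(out) < max_words and out[-1] != ".":
        options = followers.get(out[-1])
        if not options:
            break
        # No truth check here: any word seen after the previous word is fair game.
        out.append(rng.choice(options))
    return " ".join(out)

model = train_bigrams(CORPUS)
for seed in range(3):
    print(generate(model, "the capital of italy", seed=seed))
```

Every completion is a fluent sentence of the form “the capital of italy is ...”, but the city is drawn from whatever followed “is” in the corpus, so it may be Paris or Madrid as readily as Rome. The toy model is not lying and not misperceiving anything; it is simply indifferent to whether the sentence is correct.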

What does it mean for us?

This matters because many companies have started using ChatGPT to enhance their operations. Over-relying on this tool without verifying the reliability and accuracy of its responses can lead to serious consequences. It is crucial for organizations to critically assess the information generated by ChatGPT to avoid potential pitfalls and ensure sound decision-making. For instance, a marketing team might create campaign strategies based on ChatGPT’s suggestions, only to find that the data it used was outdated or incorrect, leading to ineffective marketing efforts and wasted resources.

Consider also the healthcare industry, where professionals might use ChatGPT to generate patient care plans or interpret medical guidelines. If healthcare providers rely on ChatGPT’s output without validating the information, they risk creating plans that overlook critical health considerations or suggest inappropriate treatments. Such mistakes could lead to serious patient harm and erode trust in healthcare providers. As organizations increasingly integrate AI tools into their workflows, thorough verification and critical thinking become essential to maintaining credibility and achieving successful outcomes.

References:

- Hicks, Michael, Humphries, James, & Slater, Joe. (2024). ChatGPT is bullshit. Ethics and Information Technology, 26, 1-10. https://doi.org/10.1007/s10676-024-09775-5